What you need to know about AI data centers

AI companies are planning a buildout of data centers that will rank among the largest infrastructure projects in history. We examine their power demands, what makes AI data centers special, and what all this means for AI policy and the future of AI.

This report accompanies our Frontier Data Center Hub.

It’s difficult to appreciate the historic scale of AI data centers. They represent some of the largest infrastructure projects humanity has ever created.

To get a sense of the scale, consider that OpenAI’s Stargate Abilene data center will need:

- Enough electricity to serve the population of Seattle[1]

- More than 250× the computing power of the supercomputer that trained GPT-4[2]

- A plot of land larger than 450 soccer fields[3]

- $32 billion in construction and IT equipment costs

- A few thousand construction workers[4]

- Around two years for construction[5]

And that’s just a small part of the picture. Companies are currently building many other data centers like Stargate Abilene.[6] By the end of 2027, AI data centers could collectively see hundreds of billions of dollars in investment, rivalling the Apollo program and Manhattan Project.
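The comparisons in the list above follow from the figures given in the footnotes. As a rough sketch (the variable names are ours, and the inputs are the rounded values the footnotes quote), the arithmetic can be reproduced like this:

```python
# Rounded inputs taken from the article's footnotes.
HOURS_PER_YEAR = 365 * 24

# Seattle: about 9 million MWh per year, converted to average power.
seattle_wh_per_year = 9e6 * 1e6  # MWh -> Wh
seattle_avg_gw = seattle_wh_per_year / HOURS_PER_YEAR / 1e9

# Compute: GPT-4 cluster (25,000 A100s at ~3e14 FLOP/s each)
# versus the ~2e21 FLOP/s estimated for Stargate Abilene.
gpt4_cluster_flops = 25_000 * 3e14
compute_ratio = 2e21 / gpt4_cluster_flops

# Land: a 3.5 km^2 site versus 0.00714 km^2 per soccer field.
soccer_fields = 3.5 / 0.00714

print(f"Seattle average power: {seattle_avg_gw:.2f} GW")
print(f"Compute ratio: {compute_ratio:.0f}x")
print(f"Soccer fields: {soccer_fields:.0f}")
```

Each result lands on the safe side of the headline claim: roughly 1 GW, more than 250×, and more than 450 fields.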

This raises many questions, such as:

- Why do we need these huge data centers?

- What makes them so exceptional as infrastructure projects?

- Where are people building them?

- How will we get the power to run them?

- And what does this all mean for the climate, AI policy, and the future of AI?

We examine each of these questions below.

Power – the most important thing to know about an AI data center

Power determines where AI data centers are built

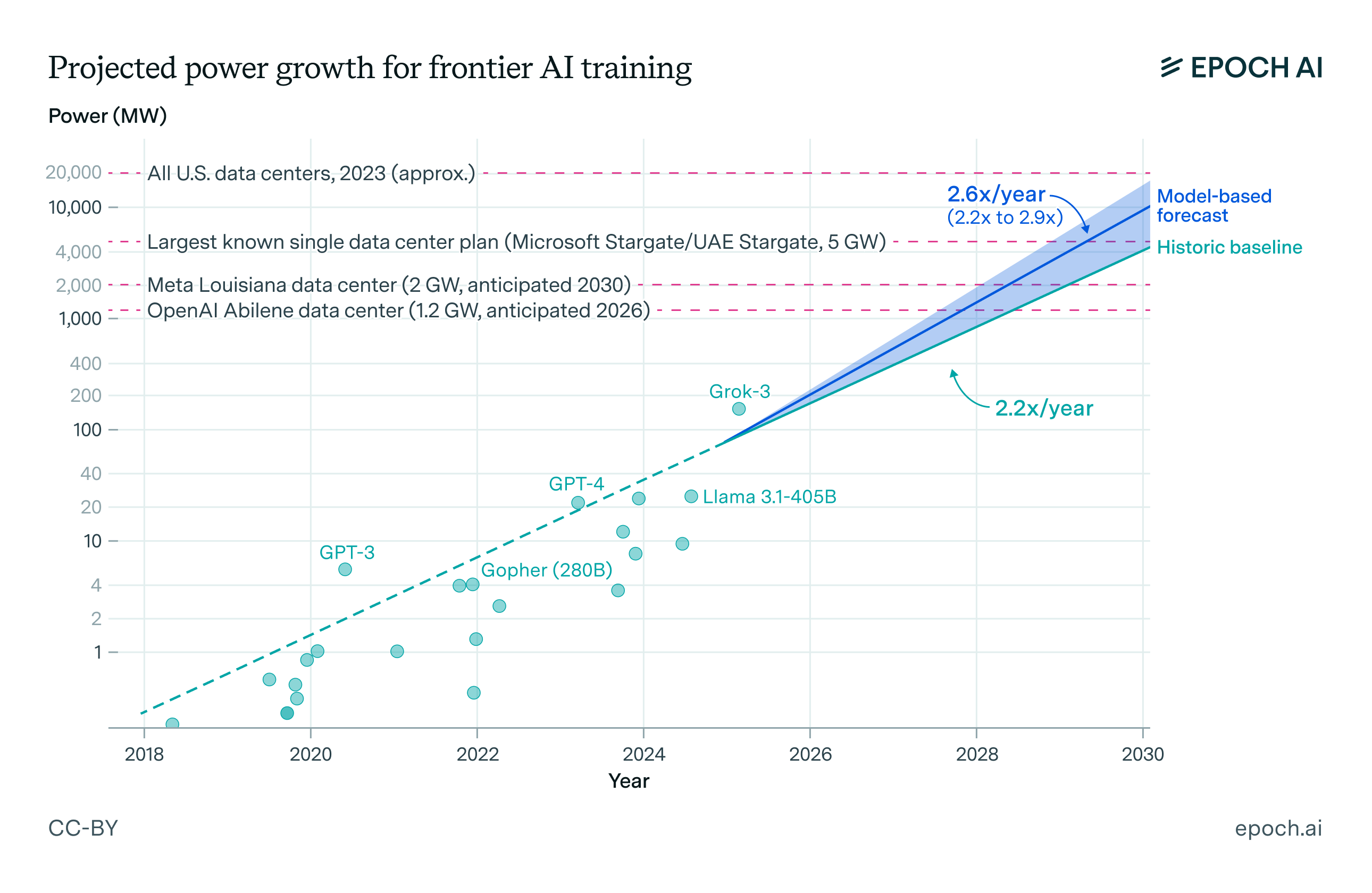

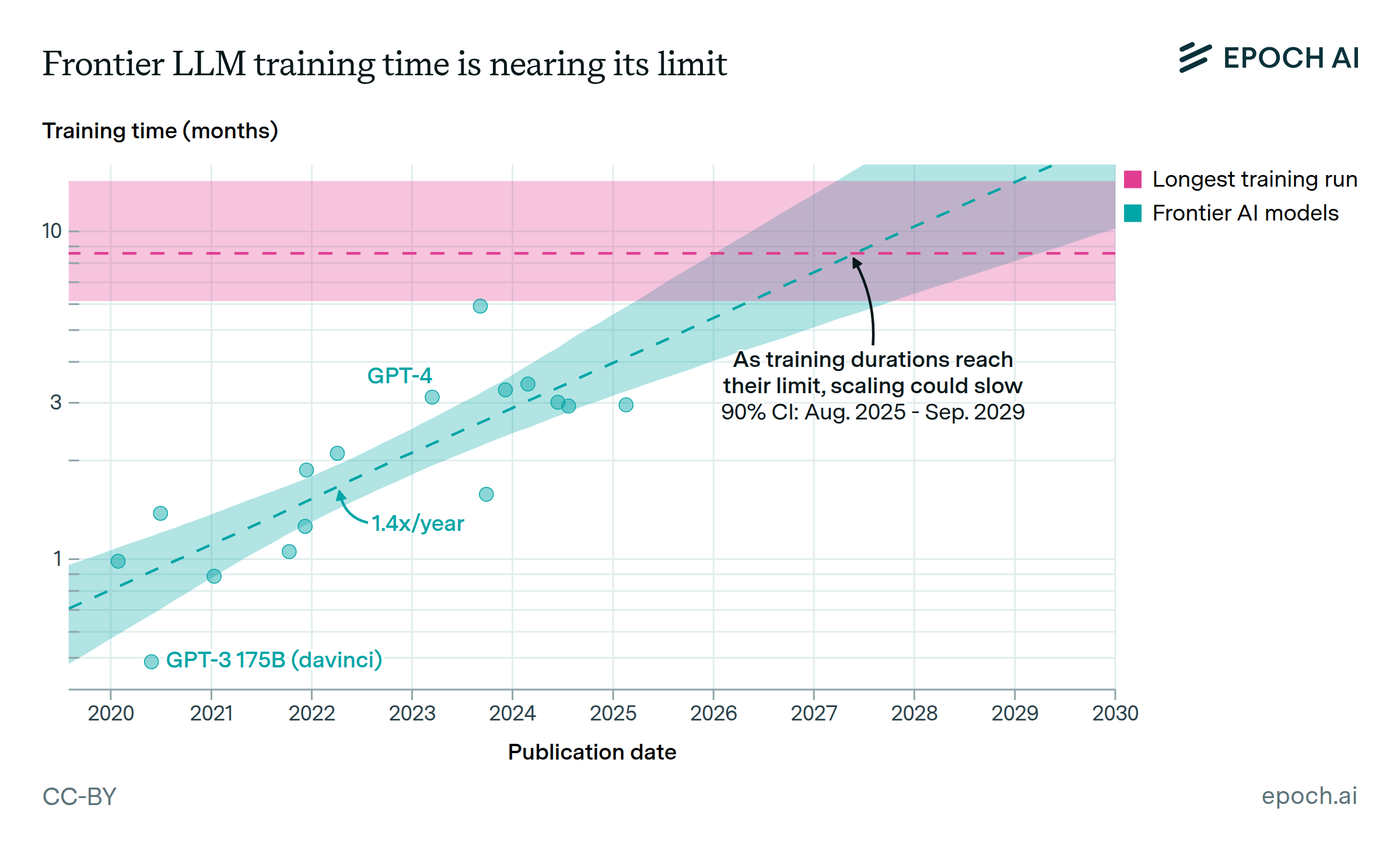

An AI data center is just a group of buildings stuffed with computers to train and run AI systems. What makes them unique is that they need to be huge to help support AI’s massive demand for computational resources, often just called “compute”. If you could somehow train xAI’s Grok 4 on a 2024 iPad Pro, it’d take you several hundred thousand years to finish.[7] And the compute used to train frontier AI models grows at around 5× per year.
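The iPad comparison is a simple division. A minimal sketch, assuming the rounded figures from the footnotes (about 38 trillion operations per second for the iPad, about 500 trillion trillion operations for Grok 4) and ignoring memory limits entirely:

```python
IPAD_OPS_PER_SECOND = 38e12        # ~38 trillion operations per second
GROK4_TRAINING_OPS = 500e12 * 1e12  # ~500 trillion trillion operations
SECONDS_PER_YEAR = 365 * 24 * 3600

# Time to perform all training operations at the iPad's peak rate.
training_years = GROK4_TRAINING_OPS / IPAD_OPS_PER_SECOND / SECONDS_PER_YEAR

print(f"Training time on one iPad: about {training_years:,.0f} years")
```

This is an idealized lower bound on the time, since it assumes the device runs at its peak rate without interruption.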

With so much compute, AI data centers need a ton of power. This means most AI data centers will be built in the handful of countries able to provide that power — at least over the next few years.

How much power are we really talking about? In the US, AI data centers will collectively need around 20 to 30 gigawatts (GW) of power by late 2027. To put this in perspective, 30 GW is about 5% of current average US power generation capacity and 2.5% of China’s, large but manageable fractions of these large grids.

In most other countries, 30 GW would make up an unsustainably large fraction of total power. It would be around 25% of power generation capacity in Japan, 50% in France, and 90% in the UK.[8] This doesn’t stop these other countries from building out a few gigawatt-scale data centers or hosting frontier labs,[9] and they may be able to grow their aggregate power supplies. But meeting AI’s power demands will likely be easier in China and the US.
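To see how the aggregate and single-site figures relate, the sketch below back-derives each country’s implied total from the rounded percentages quoted above, then computes the share a single 3.3 GW site (the Microsoft Fairwater estimate from the footnotes) would take. The implied totals are an artifact of those rounded percentages, not official statistics, and the variable names are ours.

```python
AGGREGATE_GW = 30.0   # upper end of projected US AI demand by late 2027
SINGLE_SITE_GW = 3.3  # Microsoft Fairwater estimate quoted in the footnotes

# Rounded share of each country's power that 30 GW would represent.
share_of_aggregate = {
    "US": 0.05, "China": 0.025, "Japan": 0.25, "France": 0.50, "UK": 0.90,
}

single_site_share = {}
for country, share in share_of_aggregate.items():
    implied_total_gw = AGGREGATE_GW / share  # back-derived, not an official figure
    single_site_share[country] = SINGLE_SITE_GW / implied_total_gw
    print(f"{country}: ~{implied_total_gw:.0f} GW implied total, "
          f"one 3.3 GW site = {single_site_share[country]:.2%}")
```

The results come out slightly above the rounded 0.5% to 9% range given in the footnotes, because 3.3 GW is a little more than a tenth of 30 GW.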

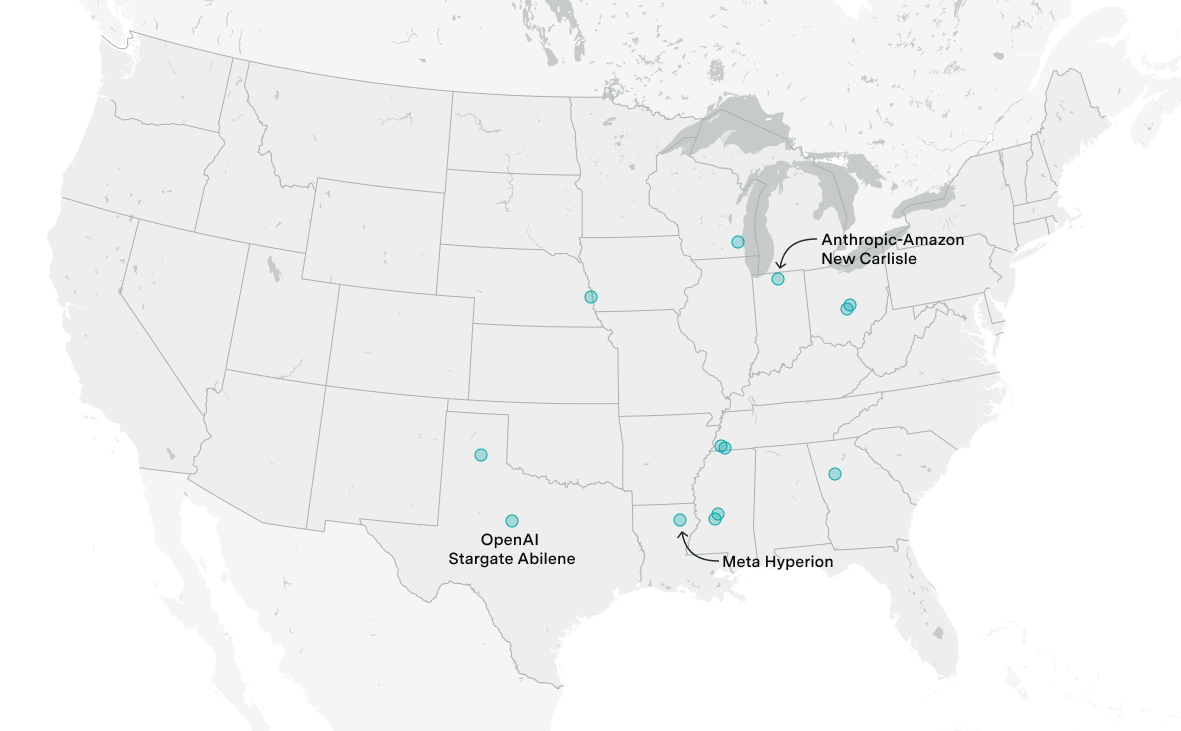

Power demand also constrains where gigawatt-scale data centers can be built within a country. In the US, companies are planning to build these facilities in states like Texas, which have abundant natural gas and less regulatory “red tape”. This makes it easier to bring gigawatts of power to a single location.

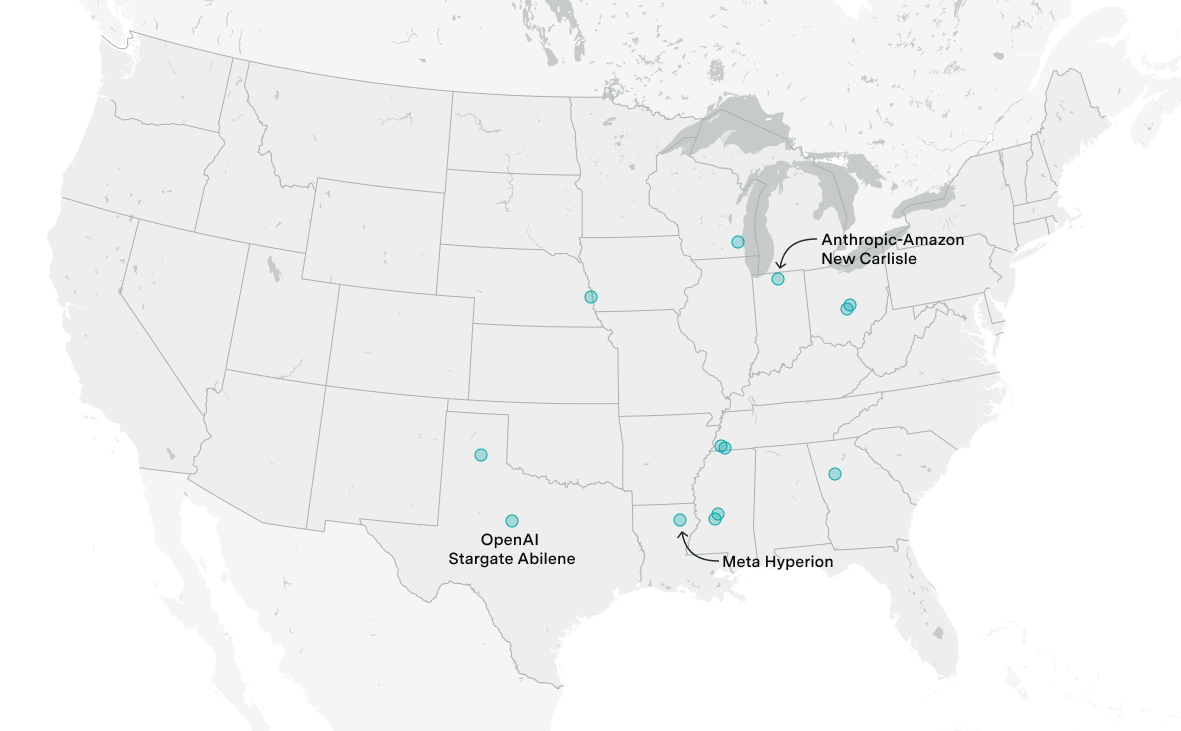

Some of the most compute-intensive AI data centers in the US are planned to be built in the Midwest and the Southern US, where power is typically easier to access. These states tend to have greater natural gas availability and less regulatory “red tape”.

Other factors tend to matter a lot less for where people build AI data centers.[10] For example, some people might expect that data centers need to be located close to large cities, so that data can be quickly communicated to end users. But physical distance turns out to matter surprisingly little: models take orders of magnitude longer to process user requests than the time it takes for data to be sent across the globe. Even if a data center were placed on the Moon, model processing times might be longer than data communication times![11]

There may be some use cases that need short data transfer times, such as autonomous vehicles or AI-driven video games. But in many cases, such as training and running language models, the speed of data transfer matters a lot less.
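The latency argument can be checked with a few lines of arithmetic. This sketch uses the footnote’s assumptions (signals at about 200,000 km/s, roughly 100 words generated per second, a 1,000-word response). The Earth-Moon distance and the vacuum speed of light are our own added assumptions for the Moon case.

```python
FIBER_SPEED_KM_S = 200_000  # about two-thirds of the speed of light
LIGHT_SPEED_KM_S = 299_792  # vacuum speed of light (assumption for the Moon case)
EARTH_MOON_KM = 384_400     # average Earth-Moon distance (assumption)

def one_way_ms(distance_km, speed_km_s=FIBER_SPEED_KM_S):
    """One-way signal travel time in milliseconds."""
    return distance_km / speed_km_s * 1000

new_york_ms = one_way_ms(2_500)    # Texas to New York
tokyo_ms = one_way_ms(10_000)      # Texas to Tokyo
moon_round_trip_s = 2 * one_way_ms(EARTH_MOON_KM, LIGHT_SPEED_KM_S) / 1000

generation_s = 1_000 / 100         # 1,000 words at ~100 words per second
slowdown = generation_s * 1000 / new_york_ms

print(f"New York: {new_york_ms:.1f} ms, Tokyo: {tokyo_ms:.1f} ms")
print(f"Moon round trip: {moon_round_trip_s:.1f} s")
print(f"Generation takes about {slowdown:.0f}x longer than the New York hop")
```

Under these assumptions, even a round trip to the Moon takes a few seconds at most, which is still shorter than the roughly 10 seconds needed to generate the response.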

Where power comes from

Of course, if AI data centers will collectively use 20 to 30 GW by late 2027 in the US alone, the power will have to come from somewhere. But where?

For their primary power, companies connect data centers to the grid and build new power plants to support them. Often, they’ll do both for the same data center.[12] For example, Stargate Abilene will initially rely on on-site natural gas power generation, then connect to the grid to scale up power supply.

When power demand spikes or main sources are offline, data centers rely on backup power sources, typically diesel.[13] This ensures that AI data centers consistently have enough power, optimizing their use of huge IT equipment investments.

Many power plants use natural gas turbines,[14] like those supporting OpenAI’s Stargate and Meta’s Hyperion data centers. Natural gas is especially popular because it’s relatively cheap, it can be supplied around the clock, and its power plants can be constructed fairly quickly.[15] Its popularity is also partly due to regulations that make dirtier energy sources (such as coal) harder to use at scale.[16]

In the long run, companies plan to shift some of these data centers to more renewable energy sources and storage. For instance, Stargate will likely be increasingly interconnected with the grid, relying more on Texas’s abundant wind power. Another example is the Goodnight data center, which is to be located near a wind farm in Claude, Texas (no relation to Anthropic’s Claude).

Of course, these plans may be abandoned,[17] and it’s not always obvious what the plans entail.

For example, Microsoft intends to build a 250 megawatt solar project as part of its plans for its Mount Pleasant data center. But the solar power isn’t for the data center itself. Instead, it is meant to match the power drawn from the primary natural gas supply with additional “carbon-free” power.

What’s so special about AI data centers?

AI data centers have exceptionally high power densities

All this power is used to run IT equipment, which is by far the biggest consumer of energy and the biggest expense in the whole data center. But it’s not just the total power that matters. What makes AI data centers exceptional is that they use a lot of power in a small volume. That is, they have very high power densities.

The exceptional power density comes from the structure of AI data centers. To help computer chips like GPUs and CPUs rapidly communicate data with each other, they’re packed close together in vertical cabinet-like “server racks”. Dozens of GPUs can be packed into a rack. For instance, NVIDIA’s industry-standard GB200 NVL72 holds 72 GPUs.[18]

An NVIDIA NVL72 server rack holds 72 Blackwell GPUs, which are arranged close together in rows on the rack. Source: Travis P Ball/Sipa USA via Reuters Connect

But packing IT equipment close together comes at a cost. As the above image shows, each rack isn’t very big: it’s a little over 2 meters tall, and its cross section is around 0.5 square meters. Within that small volume, the processors use over 100 kilowatts (kW) of power, enough to power 100 American homes!

This leads to the enormous power densities that really make AI data centers exceptional. Data centers that aren’t used for AI commonly use 10 kW per rack, roughly a tenth of what the NVL72 rack draws.
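As a back-of-the-envelope illustration of power density, the sketch below treats the rack as a simple box using the rounded dimensions above (2 m tall, 0.5 m² cross section, 100 kW). It also assumes, purely for comparison, that a conventional 10 kW rack occupies the same volume, which the article does not state.

```python
RACK_HEIGHT_M = 2.0          # "a little over 2 meters", rounded down
RACK_FOOTPRINT_M2 = 0.5      # approximate cross section
AI_RACK_KW = 100.0           # "over 100 kW", rounded down
CONVENTIONAL_RACK_KW = 10.0  # typical non-AI rack

rack_volume_m3 = RACK_HEIGHT_M * RACK_FOOTPRINT_M2

# Power per unit volume; the conventional rack is assumed to be the same size.
ai_density_kw_m3 = AI_RACK_KW / rack_volume_m3
conventional_density_kw_m3 = CONVENTIONAL_RACK_KW / rack_volume_m3
density_ratio = ai_density_kw_m3 / conventional_density_kw_m3

print(f"AI rack: {ai_density_kw_m3:.0f} kW per cubic meter")
print(f"Conventional rack: {conventional_density_kw_m3:.0f} kW per cubic meter")
print(f"Ratio: {density_ratio:.0f}x")
```

Because both racks are given the same volume here, the density ratio is just the ratio of rack power, about 10×.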

Huge power densities call for unique cooling systems

With this huge power density, IT equipment starts to overheat, hurting performance. So data centers are designed to keep the equipment cool — but how?

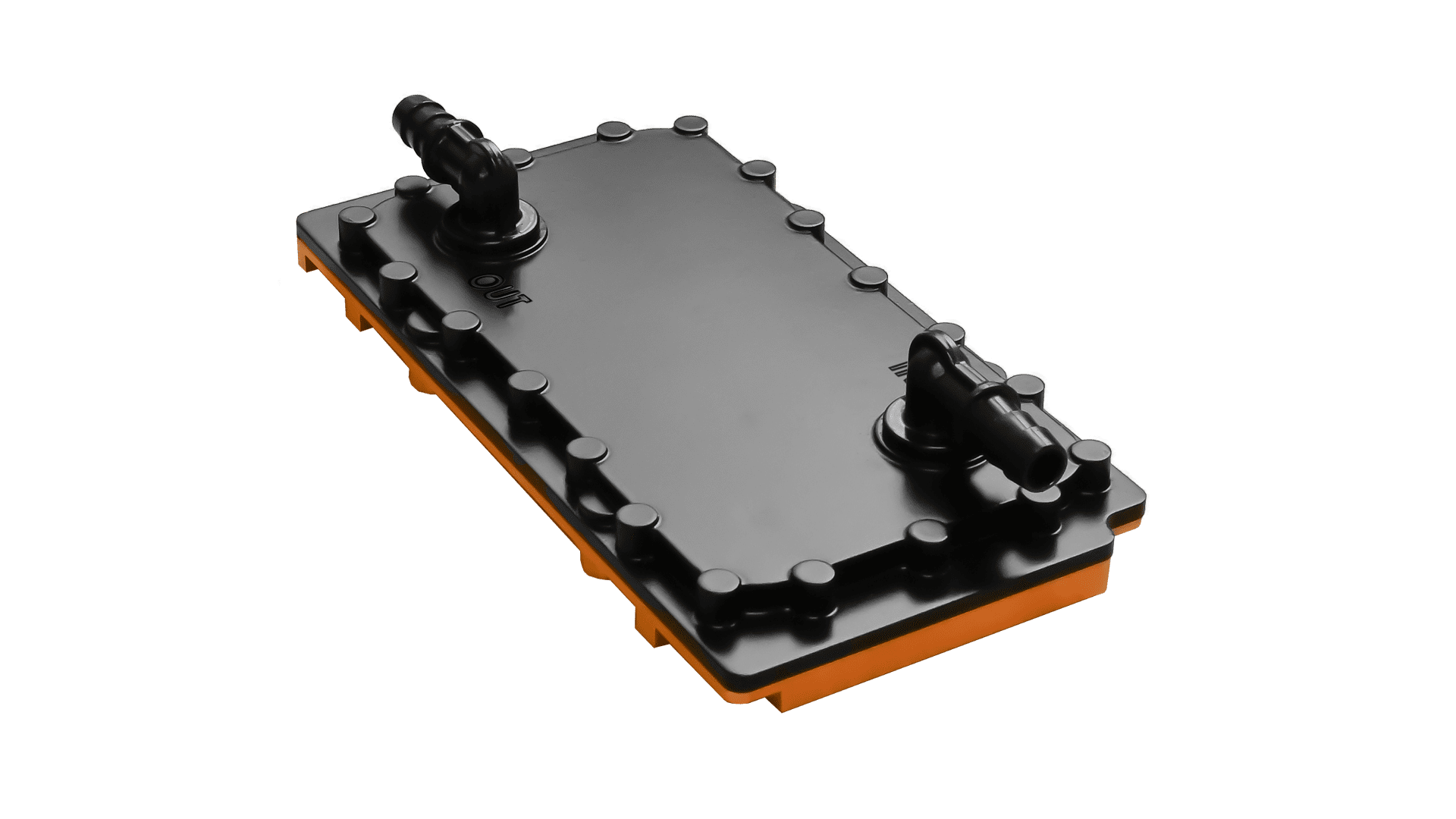

Modern AI data centers use liquid cooling — allowing a liquid coolant to flow through metal plates mounted onto IT equipment, transferring heat to the coolant. The coolant then exits the server rack and transfers the heat to water, which flows out of the IT rooms before expelling the heat to the outdoors using specialized cooling equipment.

Example of a plate that’s mounted onto a GPU to help with liquid cooling. Coolant flows in through one port and out through the other, before transferring heat to water in the main cooling infrastructure of the data center. Source: jetcool

There are two common ways to expel heat from the water.[19] The first is to use a water-cooled chiller with an evaporative cooling tower. The chiller acts like a refrigerator, moving heat from the water inside to another loop of water that flows outside, into a cooling tower. The cooling tower then exposes the hot water to outside air. Finally, the water evaporates to produce a cooling effect, in the same way that our sweat evaporates to cool our bodies down.

The second approach is to use an air-cooled chiller. Like water-cooled chillers, air-cooled chillers use refrigeration to move heat from the data center water to the outside air. However, air-cooled chillers suck air directly over the refrigerator coils to cool them down, rather than using an outer loop of water. Much more water can be reused with this approach, since water isn’t evaporated away.

Data centers with much lower power densities don’t need this sophisticated set-up. Instead, they typically rely on large fans and air conditioners to manage heat. But since air isn’t ideal for efficiently “soaking up” heat, these methods won’t cut it in AI data centers.[20] Liquid coolants and water are far superior at removing heat in these conditions, which is why people often say that AI data centers use a lot of water.

What does this all mean for AI progress and policy?

AI’s broad climate impact isn’t very big (yet)

Since AI data centers use a lot of power and water, are they having a big negative environmental impact?

Realistically, AI hasn’t yet used enough power and water to have broad climate effects. While AI data centers collectively use around 1% of total US power,[21] this is still substantially less than air conditioning (12%) and lighting (8%).

The story is similar for water. While US data centers directly used around 17.4 billion gallons of water in 2023, agriculture used closer to 36.5 trillion gallons, around 2,000× higher.[22]

Nevertheless, AI data centers could have significant local effects in regions with less energy availability. And in time, AI data centers might start contributing to climate change in a significant way, if they continue to rely on fossil fuels. After all, we project AI data centers could demand 5% of total US electricity generation in 2027, and the data center buildout could continue from there.

Overall, we think it’s very unlikely that AI has had broad effects on the climate thus far — but if trends continue, this could change within the next decade.
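The power and water comparisons in this section reduce to two ratios. A minimal sketch using the rounded figures from the text and footnotes (5 GW of AI data center load against about 500 GW of US capacity, and 100 billion gallons of agricultural water per day):

```python
# Power: current AI data center load versus total US capacity.
AI_POWER_GW = 5.0
US_POWER_GW = 500.0
ai_power_share = AI_POWER_GW / US_POWER_GW

# Water: data centers (2023, direct use) versus agriculture.
DATA_CENTER_GALLONS_PER_YEAR = 17.4e9
AGRICULTURE_GALLONS_PER_DAY = 100e9
agriculture_gallons_per_year = AGRICULTURE_GALLONS_PER_DAY * 365
water_ratio = agriculture_gallons_per_year / DATA_CENTER_GALLONS_PER_YEAR

print(f"AI share of US power: {ai_power_share:.0%}")
print(f"Agriculture water per year: {agriculture_gallons_per_year:.3g} gallons")
print(f"Agriculture uses about {water_ratio:,.0f}x more water")
```

These are national averages, so they say nothing about the local effects mentioned above, which depend on regional power and water availability.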

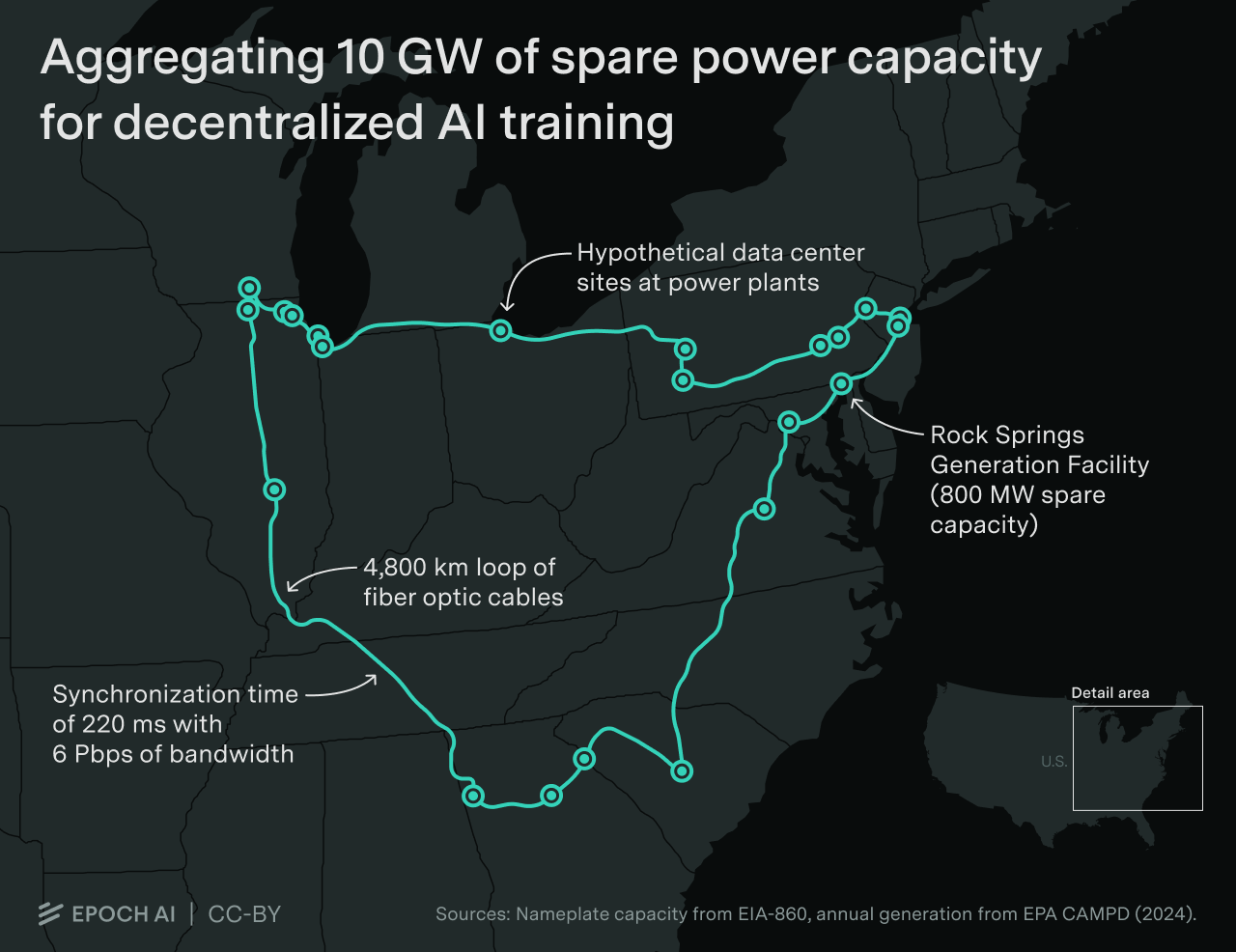

Companies probably won’t need to decentralize AI training over the next two years

Data centers are growing fast enough that we don’t need decentralized training — at least for the next two years.

On current trends, the largest AI training run in two years will need around 2.5 million H100-equivalent GPUs.[23] At the same time, we estimate that OpenAI/Microsoft’s Fairwater data center will have double this compute capacity. So there’s enough compute to keep scaling training in a single data center.

But it’s not clear that companies will do this in practice. On the one hand, single data center training might reduce construction costs.[24] It might also make training simpler. For example, networking across multiple data centers introduces more points of failure that can reduce training run reliability. On the other hand, decentralized training across different locations makes it easier to soak up excess power capacity.

Our best guess is that centralized training will continue being the norm for the next two years, given that data centers are growing fast enough to enable this. But it’s far from clear that this will be the case.
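The two-year projection earlier in this section is a compound-growth calculation. The sketch below assumes the footnote’s inputs (about 100,000 H100-equivalent GPUs for Grok 4, 5× growth per year) and the estimate that Fairwater will have double the projected requirement. The function name is ours.

```python
def projected_gpus(current_gpus, growth_per_year, years):
    """Cluster size needed if training compute keeps compounding."""
    return current_gpus * growth_per_year ** years

GROK4_GPUS = 100_000   # H100-equivalent GPUs used for Grok 4
GROWTH_PER_YEAR = 5    # frontier training compute growth per year

needed_in_two_years = projected_gpus(GROK4_GPUS, GROWTH_PER_YEAR, 2)
fairwater_capacity = 2 * needed_in_two_years  # "double this compute capacity"

print(f"GPUs needed in two years: {needed_in_two_years:,}")
print(f"Estimated Fairwater capacity: {fairwater_capacity:,}")
```

As the footnote points out, the real requirement could be lower if training runs get longer, since the same compute can then be spread over more time.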

Gigawatt-scale AI data centers are hard to secure

Relatedly, there’s been much discussion of AI data center security. Some fear that sensitive information about data centers could be leaked, and it’s challenging to prevent this entirely. As we saw earlier, gigawatt-scale data centers often involve thousands of people in construction from multiple organizations. Vetting everyone is hard!

It’s also hard to hide the location of data centers because their extensive cooling systems are large and hard to miss. They can even be identified using satellite imagery.[25] If you see a building with a bunch of giant chillers next to it, there’s a good chance it’s a data center.

For example, if we look at satellite images of Stargate, we can very clearly see the cooling equipment surrounding data center buildings as they are brought into operation:

Zooming in on OpenAI’s Stargate Abilene data center, we see rows of cooling equipment (in black) surrounding data center buildings (in white/orange). Source: from Airbus, delivered by Apollo Mapping.

And this means the common analogy between AI development and the “Manhattan Project” is somewhat inapt: it’s substantially harder to keep a project of this scale secret from the rest of the world.

Conclusion

Data centers will be key to AI progress over the next few years, so it’s crucial that we understand them well. The core dynamic is scale, and the most important metric to pay attention to is power. Power plays a major role in determining where data centers are built and which countries can keep up with frontier AI development, and it makes data centers hard to hide from the rest of the world.

But of course, there are still many open questions. For example, after data centers are constructed, we often don’t know for sure who’s using the compute inside of them. And we also don’t know what actions different countries will take. For example, how will countries without much power capacity respond to massive AI data center buildouts?

For these questions, we’ll have to wait and see.

We’d like to thank Josh You, Jaime Sevilla, David Owen, Clara Collier, David Atanasov, Isabel Juniewicz, and Cody Fenwick for their feedback on this post.

1. Seattle uses around 9 million MWh of energy each year (see pg. 96 of the linked report), which corresponds to (9 × 10¹² Wh) / (365 × 24 h) ≈ 1 GW of power on average.

2. GPT-4 was likely trained on a cluster of 25,000 A100 GPUs, each of which does around 3 × 10¹⁴ FLOP/s, or around 7.5 × 10¹⁸ FLOP/s in aggregate. In contrast, Stargate Abilene’s cluster is estimated to have 2 × 10²¹ FLOP/s in compute capacity, which is over 250× higher.

3. Each soccer field is 0.00714 square kilometers. OpenAI’s Stargate facility in Abilene is built on an 875-acre site, or 3.5 square kilometers.

4. In July 2025, OpenAI’s Stargate data center had around 3,000 workers on-site.

5. Crusoe plans to grow their Abilene data center campus to 1.2 GW two years after the beginning of construction. It’s plausible that this could be substantially accelerated: xAI’s Colossus 1 cluster was reportedly constructed in 122 days, though they were told that it would take two years to complete.

6. We’ll likely see the world’s first gigawatt-scale AI data center early next year. The main candidates for this are Anthropic-Amazon’s Project Rainier, xAI’s Colossus 2, Microsoft’s Fayetteville, Meta’s Prometheus, and OpenAI’s Stargate.

7. Apple’s 2024 iPad Pro has a “neural engine” specialized for AI, which performs around 38 trillion operations per second. In contrast, xAI’s Grok 4 model was trained on around 500 trillion trillion operations. So in total you’d need around 400,000 years of training! In practice this likely wouldn’t even be possible because of memory constraints, but this calculation illustrates just how compute-intensive it can be to train frontier AI models.

8. These estimates are based on the reported electricity generation in each of these countries in 2024.

9. The largest planned data center we know of is Microsoft Fairwater, which we estimate will need 3.3 GW of power. This is about a tenth of the aggregate 30 GW estimate, so it would demand about 0.5% of power in the US, 0.25% in China, 2.5% in Japan, 5% in France, and 9% in the UK. This is more manageable at an aggregate level, but it would still be challenging to supply this power to a small region.

10. Other factors include the slope of the terrain, whether the land is federally owned, and the demand for “sovereign AI compute”.

11. To see why, suppose you’re running a language model in Texas, and we want to see how long it takes to reach users in New York and Tokyo. If signals can be transferred at two-thirds of the speed of light (i.e. 200,000 km/s), and the distance between Texas and New York is around 2,500 km, then it takes around 2,500 km / (200,000 km/s) = 12.5 milliseconds for responses to reach users. The distance between Texas and Tokyo is around 10,000 km, so the travel time is around four times longer. In contrast, current frontier models generate around 100 words per second, so it’d take on the order of 10 seconds to generate a typical response of around 1,000 words, almost a thousand times longer than 12.5 milliseconds. Even if we placed a data center on the Moon, the latency would still only be around 2 seconds.

12. Yet another approach could be used when AI developers are desperate for power. It is called “data center flexibility”, which involves significantly reducing a data center’s load around 1% to 5% of the time during peak power demand, in exchange for access to power that wouldn’t otherwise have been available. Power suppliers benefit from this arrangement, because it reduces the risk of blackouts and reduces strain on the local grid.

13. For example, plans for one of Anthropic’s Project Rainier data centers include 238 “diesel-fired critical emergency generators”.

14. Natural gas turbines burn natural gas in compressed air to turn a turbine, which helps generate electricity.

15. The US Energy Information Administration reports around 20 examples of power plants with different energy sources, and the cheapest plants per kilowatt use natural gas (the full set of numbers can be found in this spreadsheet).

16. For example, although diesel is a common source of backup energy, it generally hasn’t been used very much at AI data centers. This is likely due to Title V of the Clean Air Act, which subjects facilities emitting more than 100 tons of pollutants per year to major permitting requirements. In a data center with gigawatt-scale generators, these limits may be reached in just a few hours of operation, effectively restricting diesel to short-term backup roles. That said, it is sometimes possible to circumvent these kinds of permitting bottlenecks. For instance, xAI may have deployed a bunch of natural gas turbines prior to receiving permits.

17. There’s already some precedent for plans changing. For example, Crusoe’s Goodnight data center recently scaled back its construction plans from seven buildings to as few as three or four.

18. Note that this contains 36 “Grace Blackwell Superchips”, each of which consists of two Blackwell GPUs and one Grace CPU.

19. A third approach is to “pour the hot water into the river”, but this can harm local aquatic wildlife and is regulated under the Clean Water Act in the US.

20. As an analogy, you can cool a hot saucepan much faster by pouring cold water onto it compared to blowing cold air onto it!

21. Currently, AI data centers collectively use around 5 GW of power in the US, compared to around 500 GW in total power capacity in the country.

22. Agriculture used 100 billion gallons of water a day in 2023, or 36.5 trillion gallons of water over the year.

23. Grok 4 was trained on about 100,000 H100-equivalent GPUs, and training compute grows at 5× per year in frontier models. So within two years we’ll need a cluster of around 2.5 million GPUs. In practice the number could be lower than this, since training runs could get longer over time.

24. It might be cheaper to build (say) a single 5 GW data center than five 1 GW ones in different locations. For example, if you build in a single location, you only need to get regulatory approval for that one place, whereas you need to do so multiple times for multiple locations.

25. It’s important to expose cooling systems to the atmosphere, such as to release water vapor or expel hot air. Since the power density is so high, cooling equipment has to go outside, rather than just blowing air out of the sides of the building.