Introducing the Frontier Data Centers Hub

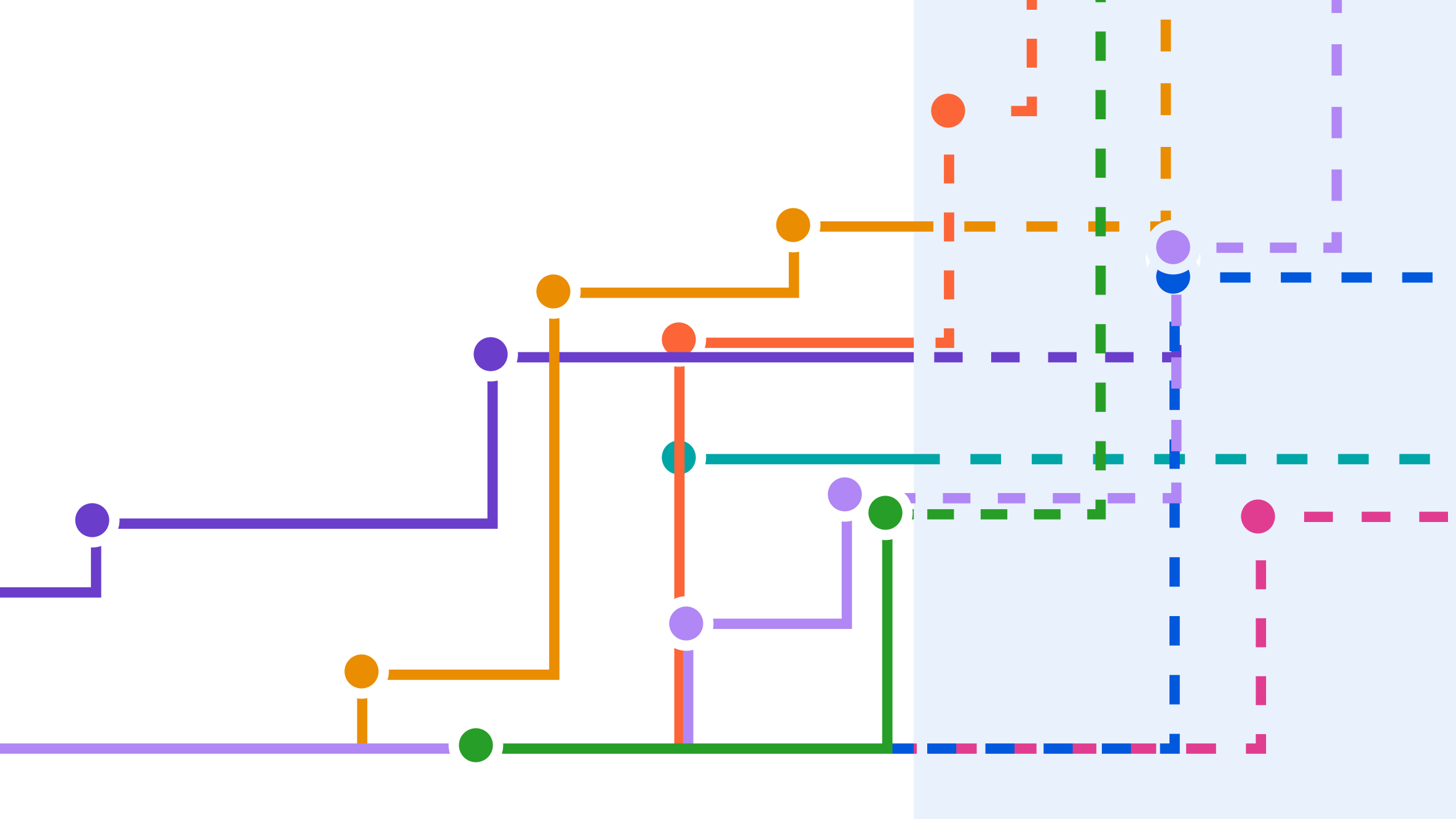

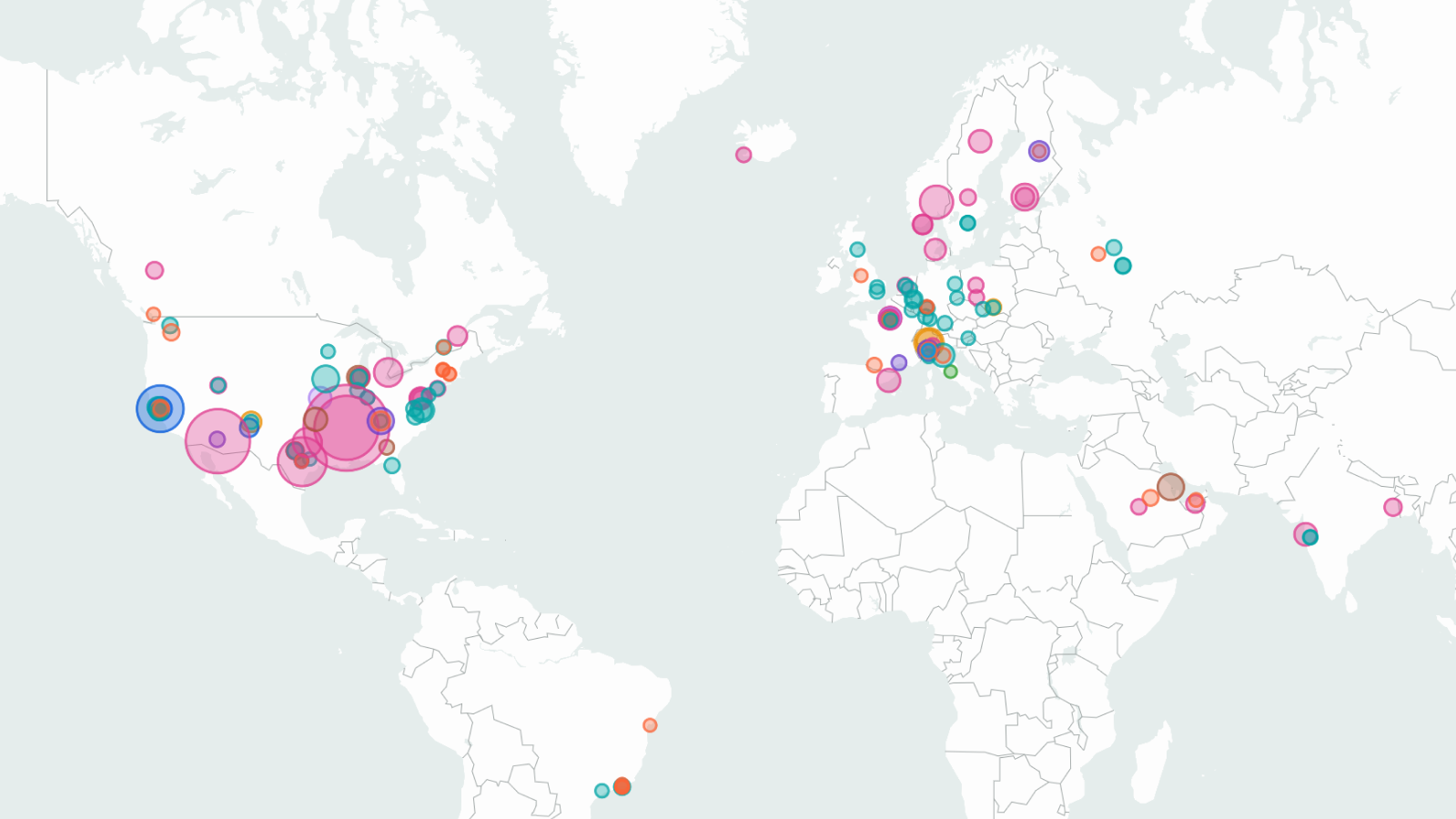

We announce our new Frontier Data Centers Hub, a database tracking large AI data centers using satellite and permit data to show compute, power use, and construction timelines.

Companies are building AI data centers at an unprecedented scale. These facilities have the power capacity of small countries and cost tens of billions of dollars to construct. Yet until now, the details of their true capacity and progress have remained opaque. To help the public, researchers, policymakers, and investors understand the scale of this new infrastructure wave, Epoch AI has created the Frontier Data Centers Hub.

This open database tracks the construction and capacity of major AI data centers using satellite imagery, public permits, and other open sources. It’s the most detailed public resource to date on how much power, land, and hardware the largest AI companies are deploying, and when.

The 13 large U.S. data centers tracked in the hub account for a substantial share of total compute stock globally: about 2.5 million (~15%) of the roughly 15 million H100-equivalents that have been delivered to customers in the past several years as of late 2025.

You can read more about how AI data centers work in our explainer “What you need to know about AI data centers” and more about our methodology here.

Power

Power is a key constraint for AI data centers, and the largest AI facilities will soon rival small nations in energy use. A single 1 GW site draws enough electricity to serve around a million U.S. homes.
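As a rough sanity check on the homes comparison, the sketch below converts a site's power into an equivalent number of average households. The household consumption figure is an assumption of ours, not a number from the hub, and the calculation treats the site as drawing its full capacity continuously. Under those assumptions, 1 GW works out to roughly 800,000 homes, the same order of magnitude as the figure above.

```python
# Assumption (not from the hub): an average U.S. home uses roughly
# 10,500 kWh of electricity per year. Adjust to taste.
ASSUMED_KWH_PER_HOME_PER_YEAR = 10_500
HOURS_PER_YEAR = 8_760


def homes_served(site_power_gw: float,
                 kwh_per_home_per_year: float = ASSUMED_KWH_PER_HOME_PER_YEAR) -> float:
    """Number of average homes whose mean draw equals the site's power."""
    avg_home_kw = kwh_per_home_per_year / HOURS_PER_YEAR  # mean household draw in kW
    site_kw = site_power_gw * 1_000_000                   # 1 GW = 1,000,000 kW
    return site_kw / avg_home_kw


print(f"{homes_served(1.0):,.0f} homes")
```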

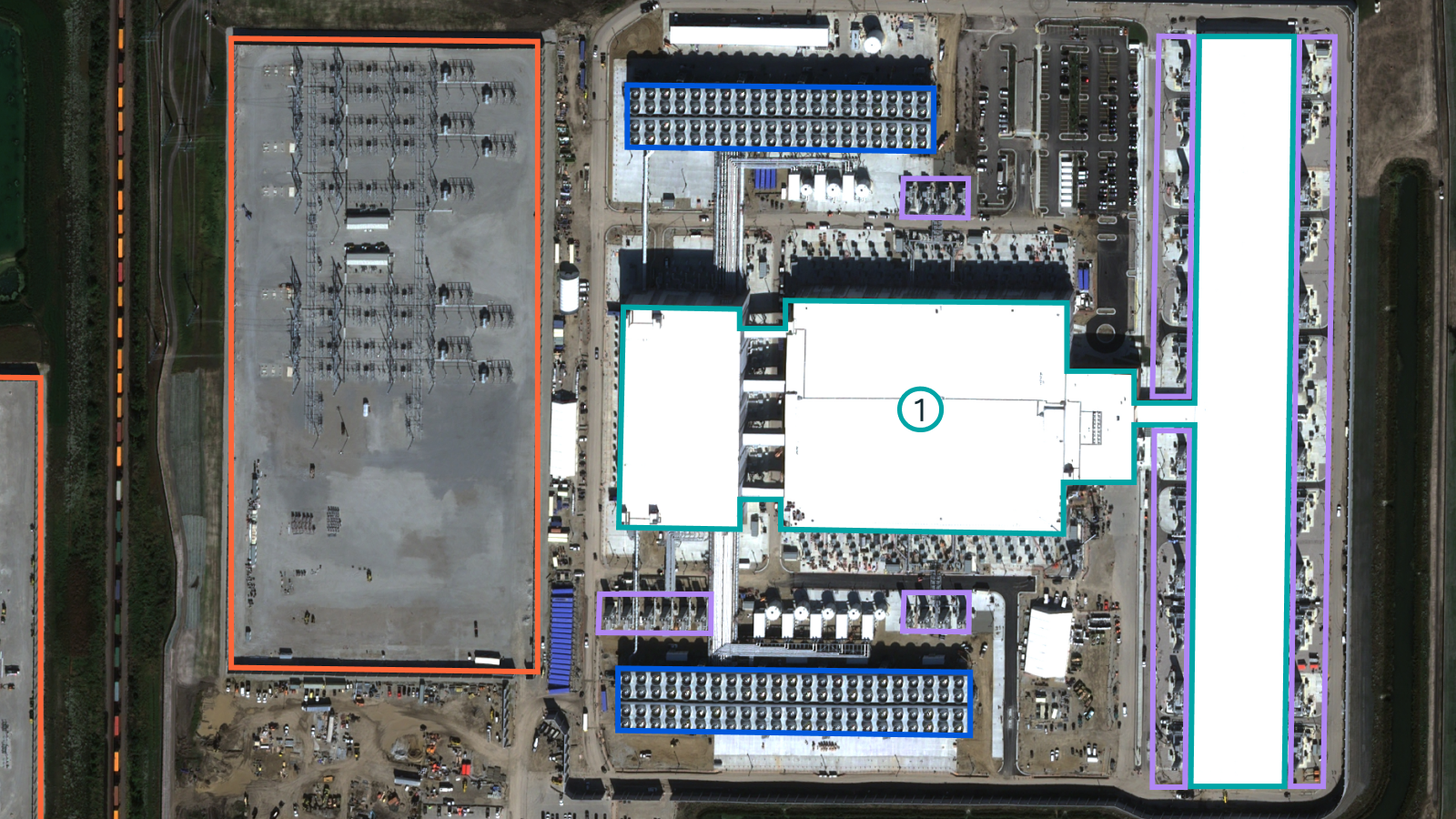

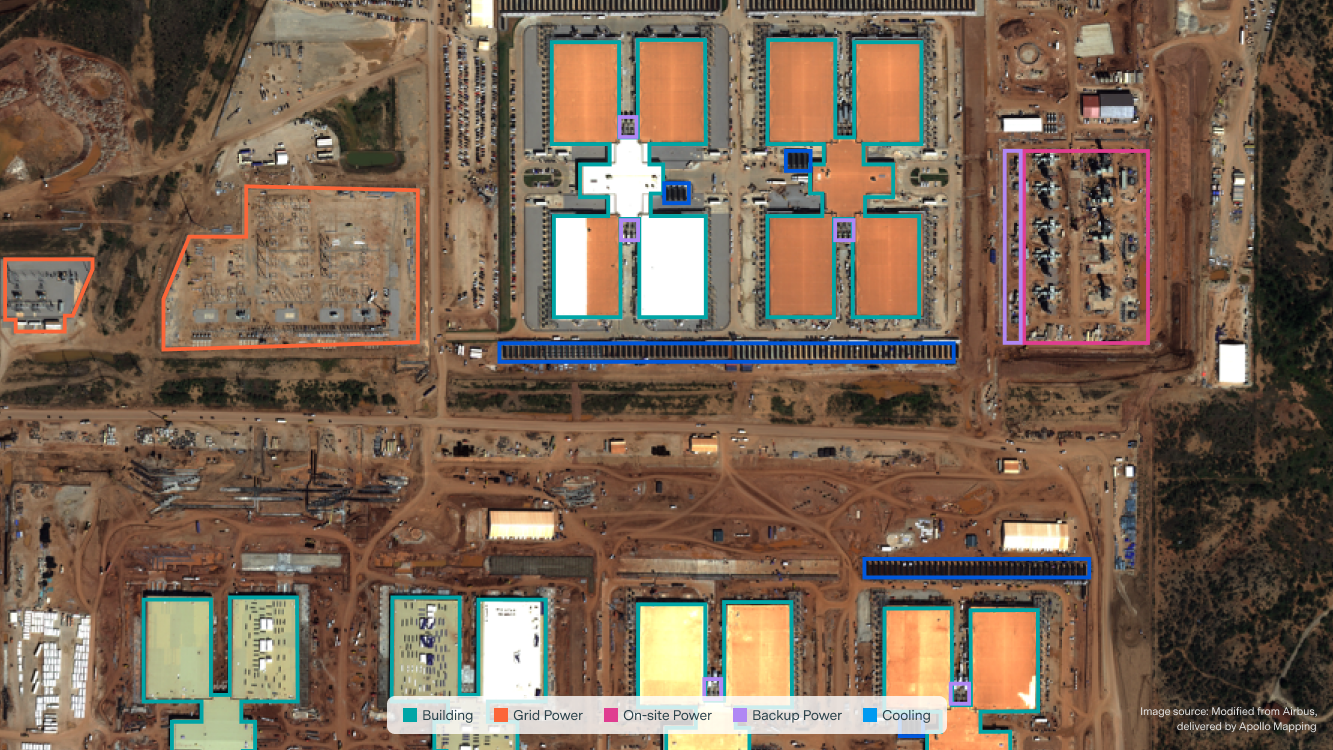

In the hub, we track the total facility power for each site. A data center’s facility power is higher than the power capacity of its IT equipment, typically by a factor of around 1.3. Our estimates draw on high-resolution satellite and aerial imagery, where we identify and measure cooling infrastructure such as chillers and cooling towers to infer the total power capacity of each data center. We corroborate this analysis with supporting documents, e.g. permits and public disclosures by the companies involved.
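The relationship between facility power and IT power can be sketched in a few lines. This is a minimal illustration that assumes the typical factor of 1.3 quoted above applies uniformly; the function names are ours, and real sites will vary.

```python
# Typical ratio of total facility power to IT power, as quoted in the text.
# Individual sites may differ; this is a simplifying assumption.
FACILITY_TO_IT_RATIO = 1.3


def it_power_mw(facility_power_mw: float, ratio: float = FACILITY_TO_IT_RATIO) -> float:
    """IT equipment power implied by a given total facility power."""
    return facility_power_mw / ratio


def facility_power_mw(it_mw: float, ratio: float = FACILITY_TO_IT_RATIO) -> float:
    """Total facility power implied by a given IT equipment power."""
    return it_mw * ratio


# A 1 GW (1,000 MW) facility supports about 769 MW of IT equipment.
print(f"{it_power_mw(1000.0):.0f} MW of IT power")
```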

Our data show that several data centers will likely cross the 1 GW mark of total facility power in 2026:

- Anthropic–Amazon New Carlisle (January),

- xAI Colossus 2 (February),

- Microsoft Fayetteville (March, borderline 1 GW),

- Meta Prometheus (May), and

- OpenAI Stargate Abilene (July).

Among the facilities we have tracked, the time from starting construction to achieving 1 GW of facility power capacity ranges from 1 to 3.6 years, with xAI projecting just 12 months to build Colossus 2.

You can follow the build-out of each data center in the hub’s Satellite Viewer, in which we track their timelines with annotated high-resolution satellite pictures.

Build-out of Anthropic/Amazon New Carlisle.

Image source: Copernicus Sentinel data 2025.

Build-out of OpenAI Stargate Abilene.

Image source: Copernicus Sentinel data 2025.

A key thing that makes large-scale data centers discoverable is their cooling infrastructure. It’s hard to hide the massive chillers required to prevent billions of dollars in equipment from overheating. By analyzing satellite imagery and correlating it with permit documents and hardware specifications, we can estimate capacity within surprisingly narrow margins.

High-resolution satellite image of OpenAI Stargate Abilene on September 26, 2025: Building 1 and Building 2 likely fully operational.

Image source: Copernicus Sentinel data 2025. From Airbus, delivered by Apollo Mapping.

Compute

This massive power draw is needed to run the chips that train and operate frontier models. If current trends in the scaling of training runs hold, the largest AI training run in two years could use around 2.5 million H100-equivalents (H100e). H100e is a measure of compute processing performance expressed in units of NVIDIA H100 processing power, with 1 H100e = the peak performance of one NVIDIA H100 GPU.
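To make the H100e unit concrete, here is a minimal sketch of the conversion implied by that definition: scale a chip count by the ratio of the chip's peak performance to that of an H100. The H100 peak figure and the example chip are assumptions for illustration only; the hub's actual models also account for chip type and efficiency trends.

```python
# Assumed peak dense 16-bit throughput of one NVIDIA H100, in TFLOP/s.
# Treat this as an illustrative input, not a value taken from the hub.
ASSUMED_H100_PEAK_TFLOPS = 989.0


def h100_equivalents(chip_count: int,
                     chip_peak_tflops: float,
                     h100_peak_tflops: float = ASSUMED_H100_PEAK_TFLOPS) -> float:
    """Express a fleet of chips in H100e by ratio of peak performance."""
    return chip_count * chip_peak_tflops / h100_peak_tflops


# Hypothetical chip with twice the assumed H100 peak performance:
# 100,000 of them count as 200,000 H100e.
print(h100_equivalents(100_000, 2 * ASSUMED_H100_PEAK_TFLOPS))
```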

Using models based on chip type and efficiency trends, we estimate the total computational performance of the GPUs in each facility in H100e. The most powerful AI data center in 2026, xAI’s Colossus 2, will have a compute capacity of 1.4 million H100e, compared to only 100,000 for the leading data centers in mid-2024.

But data centers with even more compute capacity are on the horizon. We estimate that Meta Hyperion and Microsoft Fairwater will have the equivalent of 5 million H100s in compute when these massive data centers are completed.
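The scale-up these figures imply is easy to compute. The short sketch below expresses each capacity as a multiple of the mid-2024 leaders, using only the numbers quoted above. We read the 5 million H100e figure as applying to Hyperion and Fairwater individually, which is our interpretation of the text.

```python
# Compute capacities in H100e, taken from the figures quoted in the text.
capacities_h100e = {
    "Leading data centers, mid-2024": 100_000,
    "xAI Colossus 2 (2026)": 1_400_000,
    "Meta Hyperion / Microsoft Fairwater (at completion)": 5_000_000,
}

baseline = capacities_h100e["Leading data centers, mid-2024"]

# Multiple of the mid-2024 baseline for each entry.
multiples = {name: cap / baseline for name, cap in capacities_h100e.items()}

for name, multiple in multiples.items():
    print(f"{name}: {multiple:.0f}x the mid-2024 leaders")
```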

Like many data centers at this scale, Hyperion and Fairwater will come online in stages. Companies do not always clarify these incremental timelines in announcements, which creates confusion about what a data center’s true capacity will be over time.

Based on construction progress and timelines disclosed in permit applications, we forecast that Fairwater will see its first phase operational in March 2026, and will be fully operational by September 2027. Hyperion will likely see its first phase operational in January 2028, with an unclear completion date.

Cost

These frontier AI data centers require immense capital investment. Our data hub makes this easy to track, with estimates of the total capital cost for each data center. This includes the cost of IT hardware, mechanical and electrical equipment, building shell and white space, construction labor, and land acquisition.

We estimate a typical capital cost of $44B per gigawatt of server power, with $30B going to IT hardware and $14B to other costs. But the cost per gigawatt of total facility power is lower, typically closer to $30B, because overheads decrease the number of servers that can be supported.
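The link between the two cost figures can be illustrated with a back-of-the-envelope calculation. The sketch below assumes the typical facility-to-IT power ratio of about 1.3 mentioned in the Power section and treats server power as equal to IT power, which is a simplification on our part. Plain division gives about $34B per gigawatt of facility power, in the same range as the figure above; the hub's own estimate reflects its underlying data rather than this shortcut.

```python
# Figures quoted in the text, in billions of USD per GW of server power.
IT_HARDWARE_COST_BUSD = 30.0
OTHER_COST_BUSD = 14.0

# Assumed facility-to-IT power ratio (typical value quoted in the Power section).
FACILITY_TO_IT_RATIO = 1.3


def cost_per_gw_facility(it_cost: float = IT_HARDWARE_COST_BUSD,
                         other_cost: float = OTHER_COST_BUSD,
                         ratio: float = FACILITY_TO_IT_RATIO) -> float:
    """Capital cost per GW of facility power, given cost per GW of server power."""
    cost_per_gw_server = it_cost + other_cost  # $44B per GW of server power
    # 1 GW of facility power supports only 1/ratio GW of servers.
    return cost_per_gw_server / ratio


print(f"${cost_per_gw_facility():.1f}B per GW of facility power")
```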

This typical cost hides a steady rise in the top-end data center cost over time. The label “most expensive data center at a given time” is dominated by the massive data centers with 1 GW power capacity or more. Microsoft Fairwater is forecast to be the most expensive data center in the hub, with a total capital cost upwards of $100B upon completion.

We hope that the new Frontier Data Centers Hub will be a valuable resource for anyone interested in understanding the infrastructure behind large-scale compute. We currently cover 13 of the largest AI data centers in the United States, with plans to expand coverage globally.

Start exploring the data now, and stay tuned as we continue tracking the infrastructure buildout that’s shaping the future of AI!

This resource builds on our existing GPU Clusters database but goes much deeper. While the GPU Clusters database relies mainly on public disclosures and media reports, the Frontier Data Centers Hub uses satellite imagery and permitting data to independently verify capacity and construction progress. This shift in methodology makes it a far more detailed view of the physical and economic foundations being built for frontier AI.